New version of Blickshift Analytics released

Today is a great day for the eye tracking community: we are releasing the new version of Blickshift Analytics. It brings two fantastic improvements that will make the daily work of eye tracking researchers much easier. Your eye tracking data analysis will become faster and more accurate, and you will be able to capture a higher level of detail in your results, all while reducing the time it takes to complete a comprehensive analysis.

So let’s take a look at the two key highlights.

Number one: Dynamic AOIs powered by Artificial Intelligence

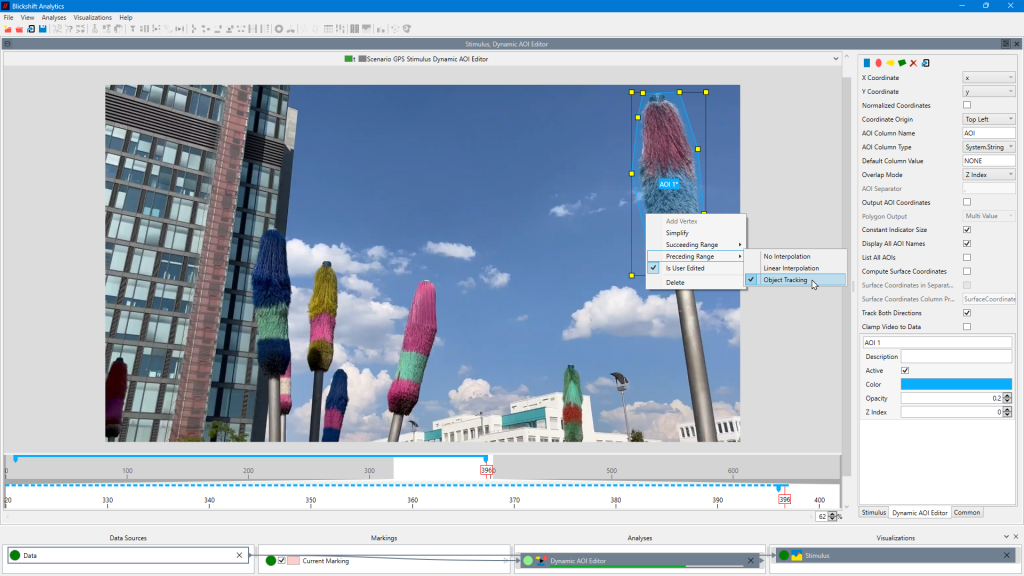

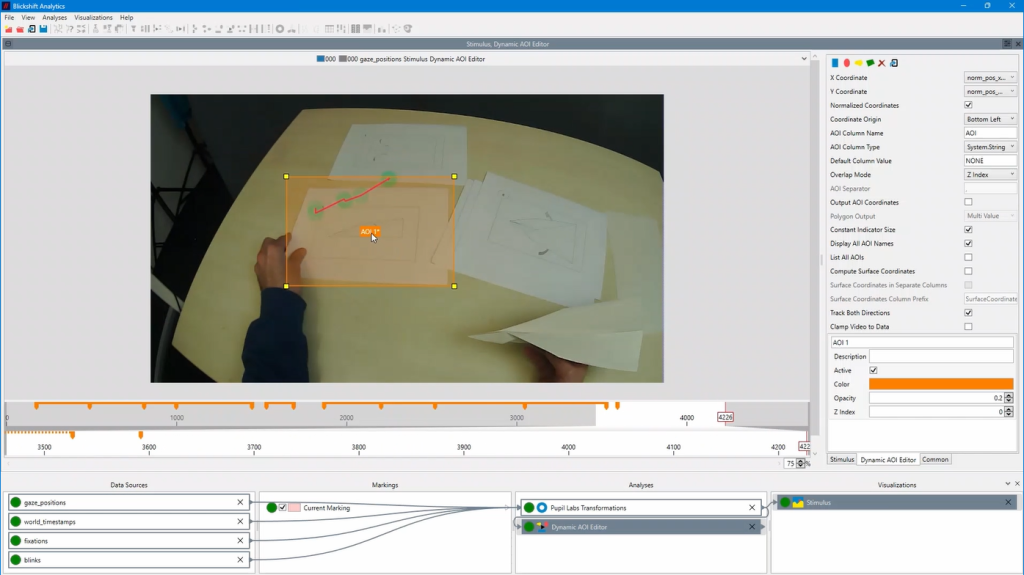

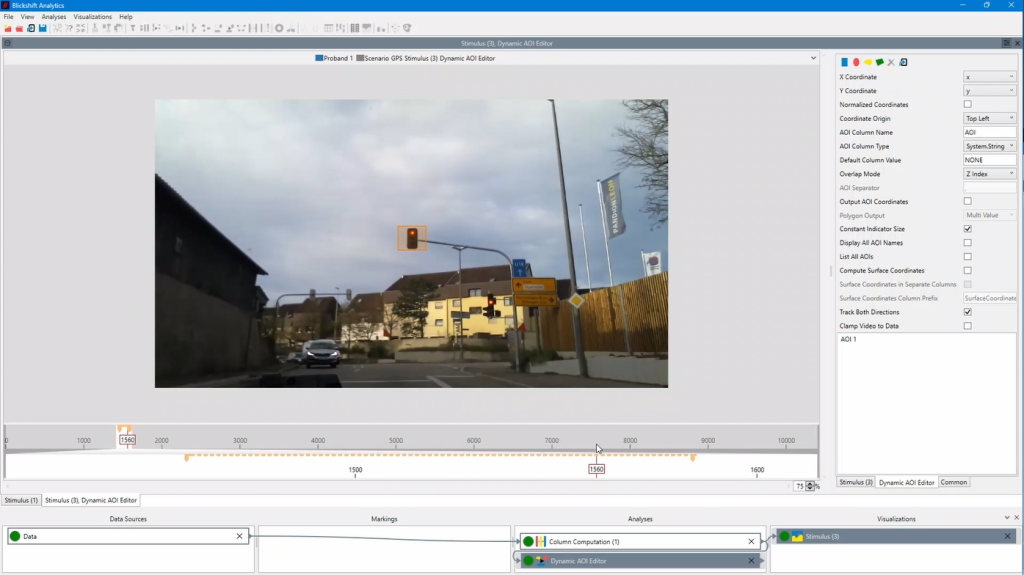

Completely new dynamic AOI editor with options for interpolating the AOI over time or for tracking in the video.
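To illustrate what interpolating an AOI over time means in general, here is a minimal Python sketch. It is not Blickshift's implementation or API: the `Keyframe` class, the function names and the rectangle representation are all assumptions made for this example. It linearly interpolates a rectangular AOI between manually set keyframes and checks whether a fixation falls inside it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Keyframe:
    """Hypothetical AOI keyframe: a rectangle placed by hand at time t."""
    t: float  # time in seconds
    x: float  # left edge in pixels
    y: float  # top edge in pixels
    w: float  # width in pixels
    h: float  # height in pixels


def interpolate_aoi(keyframes, t):
    """Return the AOI rectangle (x, y, w, h) at time t.

    Linear interpolation between the two surrounding keyframes.
    Returns None if t lies outside the keyframed time range.
    """
    frames = sorted(keyframes, key=lambda k: k.t)
    if not frames or t < frames[0].t or t > frames[-1].t:
        return None
    for a, b in zip(frames, frames[1:]):
        if a.t <= t <= b.t:
            f = 0.0 if b.t == a.t else (t - a.t) / (b.t - a.t)
            return tuple(
                getattr(a, name) + f * (getattr(b, name) - getattr(a, name))
                for name in ("x", "y", "w", "h")
            )
    # Only reached when there is a single keyframe and t equals its time.
    only = frames[0]
    return (only.x, only.y, only.w, only.h)


def fixation_hits_aoi(keyframes, t, fx, fy):
    """Check whether a fixation at (fx, fy) and time t lies inside the AOI."""
    box = interpolate_aoi(keyframes, t)
    if box is None:
        return False
    x, y, w, h = box
    return x <= fx <= x + w and y <= fy <= y + h


# Example: an AOI that moves 100 px to the right within two seconds.
keyframes = [
    Keyframe(t=0.0, x=100, y=100, w=50, h=50),
    Keyframe(t=2.0, x=200, y=100, w=50, h=50),
]
print(interpolate_aoi(keyframes, 1.0))
print(fixation_hits_aoi(keyframes, 1.0, fx=160, fy=120))
```

Linear interpolation only works well when the object moves smoothly between keyframes. For irregular motion, tracking the object in the video is the more suitable option.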

Until now, dynamic AOIs have been a complicated topic in eye tracking analysis, especially when analysing data from head-mounted eye trackers. There have been many different approaches to dealing with dynamic areas of interest, but all of them had disadvantages and led to compromises. Let’s go through them before comparing them with our solution:

1.) A standard approach was to go through the experiment fixation by fixation and map each fixation to an AOI identifier. This approach was accurate but very time consuming because of the many manual mouse clicks it required.

2.) The use of markers accelerated the mapping process, but the marker images were in the participants’ field of view, which could influence their visual perception. This method also had problems in difficult lighting conditions, for example in driving simulators.

3.) You could take a picture of a stimulus and let a computer vision algorithm map the fixations onto the stimulus from the participant’s perspective. However, this approach cannot be used in dynamic scenes.

4.) Finally, you could develop your own computer vision solution. However, not everyone is an expert in computer vision, and it takes a lot of time to implement the code.

So there was no perfect solution, and we found that unsatisfactory. Since it is part of our mission to provide you with the perfect solution, we thought about how we could really change the situation. As early as the summer of 2018, we started our first research in the field of dynamic AOIs. Unfortunately, the algorithms we wanted to use were not yet good enough at that time. In the end, it took us five years to develop the best solution for you. And, yes, the results are amazing! You can watch a short demo on our YouTube channel.

Our dynamic AOI editor overcomes all of the above drawbacks and is one of the most fascinating components we have ever brought out of our development lab!

It works in many different scenarios, from classic desktop setups with videos, websites, PC programs or console games as stimuli, to indoor recordings such as in a lab, to outdoor experiments in sunny environments, in the forest or in a car. Difficult lighting conditions were also on our list of requirements, so we can now offer you a solution for these as well. In addition, VR and AR setups can benefit from computer vision-based AOI detection, for example when there is no access to the 3D model. Our experience during the long beta test has shown that you can significantly reduce data preparation time and create analysis setups that were previously not possible at all.

Dynamic AOIs in a usability experiment with a head-mounted eye tracker

Dynamic AOIs in an automotive experiment with a fixed eye tracking device

Number two: Our brand new statistics component

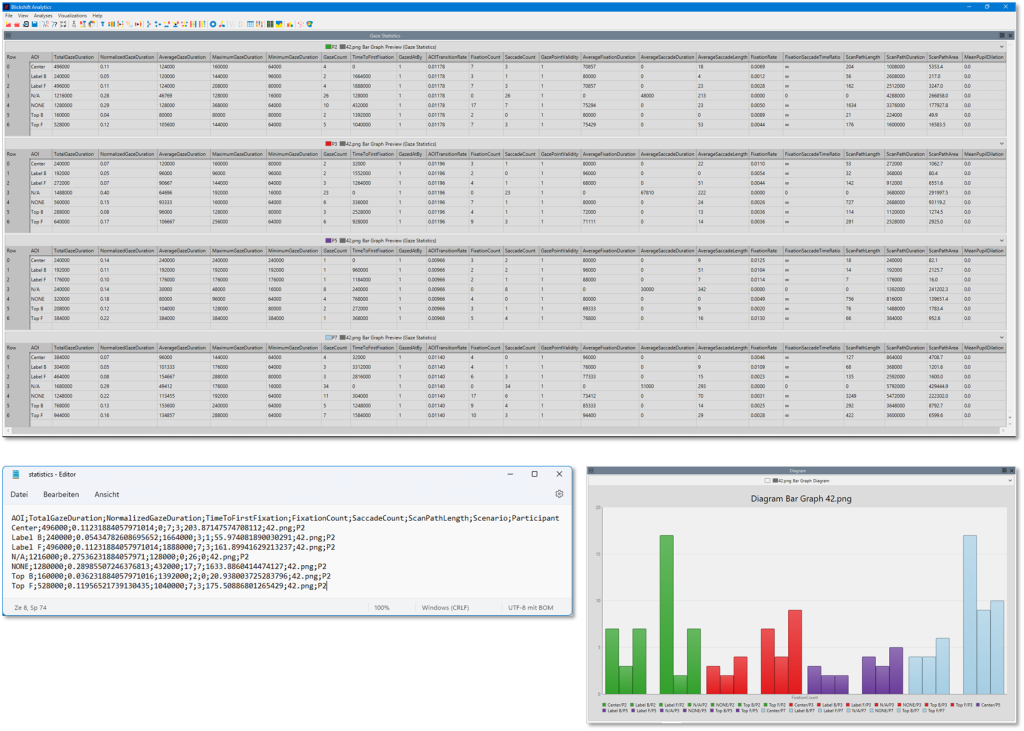

The new Gaze Statistics node contains more than 20 additional statistics for fixations, saccades, scan paths and pupil dilation, depending on the existing base data.

Finally, we would like to say a few words about the new statistics component. Traditionally, eye tracking researchers have had to either use existing R or Python scripts and rely on the quality of that code, or develop their own workflow for obtaining eye tracking metrics with other statistics software or even spreadsheet apps. That’s all a thing of the past. With our new statistics component, you can have Blickshift calculate up to 20 standard eye tracking metrics right out of the box. Once calculated, you can either visualize the results directly in our software with a graph, or export the metrics as CSV data for your later analysis and presentation.
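For readers who want to sanity-check exported values, the following Python sketch shows how three common AOI metrics (fixation count, dwell time and mean fixation duration) can be derived from a list of fixations and written as CSV. It is only an illustration under assumptions: the field names, the function names and the choice of metrics are hypothetical and do not reflect the Gaze Statistics node or the CSV layout Blickshift Analytics exports.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Hypothetical column names, chosen for this example only.
FIELDS = ["aoi", "fixation_count", "dwell_time_ms", "mean_fixation_duration_ms"]


def aoi_metrics(fixations):
    """Aggregate fixation durations per AOI into one metrics row per AOI."""
    durations = defaultdict(list)
    for fix in fixations:
        durations[fix["aoi"]].append(fix["end_ms"] - fix["start_ms"])
    return [
        {
            "aoi": aoi,
            "fixation_count": len(values),
            "dwell_time_ms": sum(values),
            "mean_fixation_duration_ms": mean(values),
        }
        for aoi, values in sorted(durations.items())
    ]


def metrics_to_csv(rows):
    """Serialize the metrics rows to a CSV string with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Example input: each fixation is already mapped to an AOI.
fixations = [
    {"aoi": "logo", "start_ms": 0, "end_ms": 200},
    {"aoi": "menu", "start_ms": 250, "end_ms": 400},
    {"aoi": "logo", "start_ms": 450, "end_ms": 750},
]
print(metrics_to_csv(aoi_metrics(fixations)))
```

Comparing a small hand-computed table like this against the exported metrics for a short recording is a quick way to confirm that metric definitions match your expectations.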

Eye Tracking Analysis beyond limits

Just by combining these two components, Blickshift Analytics will take your eye tracking analysis to a whole new level. It will make your daily work faster and more productive. Most importantly, you will gain much more insight into your participants’ eye movement behaviour in the same time as today, or even less.

So, if we have piqued your interest, click on the Get Your Trial button and experience the feeling of eye tracking analysis without limits!